What is deep learning?

Deep learning is a subset of machine learning and artificial intelligence that uses artificial neural networks with multiple layers to learn from data and make intelligent decisions. It is a powerful technique that teaches computers to process information in a way that mimics the neural networks of the human brain, enabling machines to perform complex tasks that were once exclusive to human capabilities.

At its core, deep learning uses multi-layered structures of algorithms called neural networks to analyze data and draw conclusions similar to how humans would. These networks are designed to automatically discover and learn patterns within large amounts of data, transforming raw information into increasingly abstract and sophisticated representations.

Deep learning enables digital systems to learn and make decisions based on unstructured, unlabeled data. Rather than simply responding to predefined rules, deep learning systems build knowledge from examples and use that knowledge to exhibit intelligent behavior. This approach has revolutionized fields such as computer vision, natural language processing, speech recognition, and autonomous systems.

The distinguishing characteristic of deep learning is the presence of multiple hidden layers in the neural network architecture. A deep neural network typically has at least two hidden layers, though modern architectures often contain many more. These multiple layers allow the model to learn hierarchical representations of data, with earlier layers identifying basic patterns and deeper layers recognizing increasingly complex and abstract features.

How does deep learning work?

Deep learning operates through artificial neural networks that are inspired by the biological neural networks in the human brain. These networks consist of layers of interconnected nodes called neurons, which collaborate to process and transform input data.

Neural Network Structure

A deep neural network is composed of three main types of layers –

Input Layer – This layer receives raw data, such as images, text, or numerical values. Each neuron in the input layer represents a specific feature of the input data. The input data is typically converted into numerical values that the network can process.

Hidden Layers – These intermediate layers perform the actual learning and feature extraction. Deep learning networks contain multiple hidden layers, which is what makes them “deep”. Each hidden layer transforms the data using weighted connections and activation functions, progressively extracting more abstract and complex patterns. The first layers might identify basic features like edges in an image, while deeper layers recognize more sophisticated patterns like shapes or entire objects.

Output Layer – The final layer produces the network’s prediction or classification. For instance, in an image classification task, the output layer might determine which category the input image belongs to.

Information Flow and Learning Process

Information flows through the network in a forward direction during inference. Each neuron receives inputs from the previous layer, applies a weighted sum to these inputs, adds a bias term, and then passes the result through an activation function that introduces non-linearity. These activation functions enable the network to learn complex, non-linear relationships in the data.

The connections between neurons are represented by weights, which are numerical values that determine the strength and influence of each connection. During training, the network learns by adjusting these weights and biases to minimize prediction errors. This optimization process typically uses backpropagation and gradient descent algorithms.

When training a deep learning model, the network processes examples from a labeled dataset and compares its predictions to the actual labels. The difference between the predicted and actual outputs, called the loss or error, is calculated and then propagated backward through the network. The weights are updated based on this error, gradually improving the network’s performance through iterative training cycles.

Key Mechanisms

Deep learning networks employ several important mechanisms that enable effective learning -

Weighted Connections – Each connection between neurons has an associated weight that amplifies or diminishes the influence of that connection. These weights are the parameters the network learns during training.

Activation Functions – Non-linear activation functions like sigmoid, tanh, and ReLU (Rectified Linear Unit) introduce the ability to model complex patterns and relationships in data.

Feature Hierarchy – The layered structure allows the network to automatically learn hierarchical representations, with each layer building upon the features learned by previous layers. This eliminates the need for manual feature engineering, as the network discovers relevant features directly from the data.

Backpropagation – This algorithm calculates how much each weight contributed to the prediction error and updates the weights accordingly to improve future predictions.

The ability of deep learning networks to automatically extract meaningful features from raw data and learn complex hierarchical representations is what makes them exceptionally powerful for handling tasks involving unstructured data like images, audio, and text.

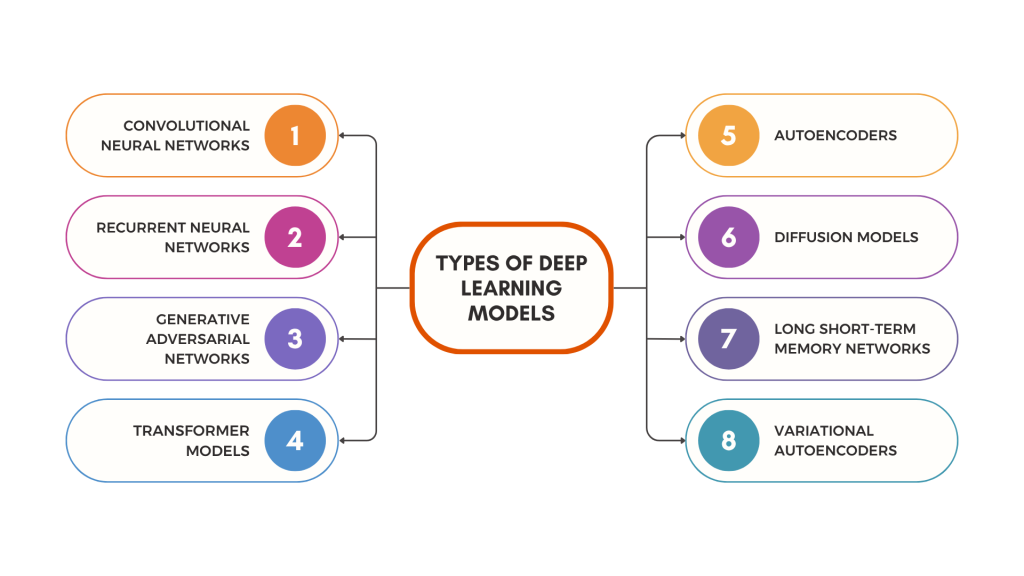

Types of deep learning models

Deep learning encompasses various neural network architectures, each designed to address specific types of problems and data structures. These architectures differ in their design, information flow patterns, and applications.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are specialized architectures designed to process grid-like data, particularly images and videos. CNNs utilize convolutional layers that apply filters or kernels to input data, enabling the network to detect spatial patterns and features such as edges, textures, and shapes.

A typical CNN architecture consists of three main components -

Convolutional Layers – These layers perform feature extraction by sliding filters across the input to create feature maps that highlight specific patterns.

Pooling Layers – These layers reduce the spatial dimensions of feature maps while retaining the most important information, typically using operations like max pooling or average pooling.

Fully Connected Layers – After several convolutional and pooling operations, these layers combine all extracted features to produce final predictions or classifications.

CNNs excel at tasks involving visual data, including image classification, object detection, facial recognition, and semantic segmentation. Their ability to automatically learn spatial hierarchies makes them indispensable in computer vision applications.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks are designed to handle sequential data where the order of inputs matters. Unlike feedforward networks, RNNs contain recurrent connections that create feedback loops, allowing information to persist and enabling the network to maintain memory of previous inputs.

This memory capability makes RNNs suitable for processing time-series data, sequential patterns, and tasks where context from earlier inputs influences current predictions. Applications include speech recognition, language modeling, text generation, and time series forecasting.

However, standard RNNs often struggle with learning long-term dependencies due to the vanishing gradient problem, where gradients become too small during training over many time steps.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks are a unique deep learning architecture consisting of two neural networks that compete against each other in a zero-sum game. Introduced by Ian Goodfellow in 2014, GANs have revolutionized generative modeling.

The GAN architecture comprises two components -

Generator Network – Creates new synthetic data samples by learning patterns from the training dataset. The generator attempts to produce data realistic enough to fool the discriminator.

Discriminator Network – What is deep learning – Acts as a classifier that distinguishes between real data from the training set and fake data produced by the generator.

During training, the generator and discriminator engage in an adversarial process where the generator improves its ability to create realistic data while the discriminator enhances its detection capabilities. Training continues until the discriminator can no longer reliably distinguish fake from real data, indicating that the generator has learned to produce highly realistic outputs.

GANs are widely used for image generation, image-to-image translation, creating photorealistic images, video synthesis, data augmentation, and producing novel content across various domains.

Transformer Models

Transformers represent a paradigm shift in processing sequential data. Unlike RNNs that process data sequentially, transformers use a self-attention mechanism that analyzes entire sequences simultaneously, allowing them to capture long-range dependencies more effectively.

Key advantages include -

- Parallel processing capabilities that dramatically accelerate training

- Superior handling of both short and long sequences

- Effective modeling of dependencies regardless of position in the sequence

Transformers power breakthrough models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have achieved unprecedented results in natural language processing tasks including text generation, language understanding, machine translation, and document classification.

The self-attention mechanism allows transformers to weigh the importance of different parts of input data, making them highly effective for complex language tasks. However, they require substantial computational resources and memory due to their larger parameter counts.

Autoencoders

Autoencoders are unsupervised learning neural networks designed for dimensionality reduction, feature learning, and data compression. An autoencoder consists of two primary components –

Encoder – Compresses input data into a lower-dimensional latent space representation, capturing the most important features.

Decoder – Reconstructs the original input from the compressed latent representation.

The network is trained to minimize the reconstruction error – the difference between the original input and the reconstructed output. By learning to compress and reconstruct data effectively, autoencoders capture meaningful patterns and structures within the data.

Autoencoders are used for feature extraction, noise reduction, anomaly detection, and data preprocessing.

Diffusion Models

Diffusion Models are a class of generative models that create data – such as images, audio, or video by gradually transforming random noise into structured outputs. They work by learning two processes – a forward diffusion process that progressively adds Gaussian noise to real data, and a reverse diffusion process that learns to remove that noise step-by-step. Once trained, the model can start from pure noise and iteratively denoise it to produce new, high-quality samples that resemble the training data distribution.

These models combine the strengths of earlier generative architectures, such as the stability of Variational Autoencoders (VAEs) and the realism of Generative Adversarial Networks (GANs), without many of their drawbacks. Their ability to generate highly detailed and coherent images has made them the foundation for state-of-the-art systems like Stable Diffusion, DALL·E 3, and Imagen. Diffusion models can also be conditioned on prompts, sketches, or other data, allowing for precise control over generated content.

Long Short-Term Memory Networks (LSTMs)

Long Short-Term Memory networks are a specialized type of RNN specifically designed to overcome the vanishing gradient problem and learn long-term dependencies in sequential data. Introduced by Hochreiter and Schmidhuber in 1997, LSTMs have become essential for tasks requiring the model to remember information over extended periods.

The LSTM architecture incorporates a memory cell and three types of gates that regulate information flow -

Forget Gate – Determines what information from the previous cell state should be discarded or retained.

Input Gate – Controls what new information should be added to the cell state.

Output Gate – Regulates what information from the cell state should be output and passed to the next time step.

These gating mechanisms allow LSTMs to selectively remember or forget information, making them highly effective for language modeling, machine translation, speech recognition, video analysis, and time series prediction. Bidirectional LSTMs process sequences in both forward and backward directions, providing even better context understanding.

Variational Autoencoders (VAEs)

Variational Autoencoders are generative models that extend traditional autoencoders by introducing probabilistic encoding. Instead of mapping input to a single fixed point in latent space, VAEs encode data as probability distributions.

The VAE architecture includes -

Encoder – Maps input data to probability distributions in latent space, typically outputting mean and standard deviation parameters that define a Gaussian distribution for each feature.

Latent Space Sampling – Randomly samples from the learned distributions, introducing variability that enables generation of new, diverse data samples.

Decoder – Reconstructs data from the sampled latent representation.

VAEs are trained using a loss function that combines reconstruction loss and Kullback-Leibler divergence, which regularizes the latent space to follow a standard distribution. This regularization creates a continuous, structured latent space that supports generating realistic new data samples.

VAEs are applied to generative tasks, data augmentation, anomaly detection, and creating variations of existing data.